It began with a voice. Polished and composed, it sounded like the CFO, and it told an assistant to release funds immediately. Only after the transfer had cleared did anyone suspect that the caller had never been human. The voice was generated by artificial intelligence (AI): a clone trained on recordings of public events and excerpts from internal meetings.

This is not a forecast. It is already happening.

Cybersecurity teams have seen a subtle but significant shift in recent months. Attacks are not just quicker but also more intelligent. Phishing emails reference last week’s Zoom call and are written in your boss’s voice. In place of poor grammar and generic pleasantries, they use department-specific terminology and tone. Some even cite business milestones.

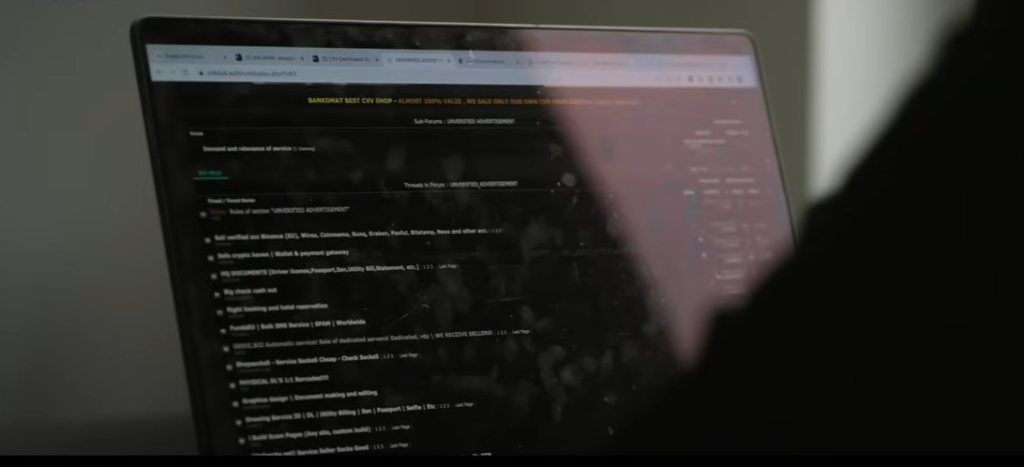

Using data scraped from forums, stolen internal documents, and the digital breadcrumbs employees leave online, AI models are producing remarkably accurate imitations of authentic communication patterns. The results are convincing. One executive received a message that mentioned a specific vendor proposal and its deadline. Nothing seemed out of place, except that the email had never passed through the company’s own mail system.

Bruce Schneier Information

| Category | Details |
|---|---|
| Full Name | Bruce Schneier |
| Profession | Cybersecurity Technologist, Author, Lecturer |
| Birth Year | 1963 |
| Known For | Expertise in cryptography, security policy, AI-driven threat analysis |
| Current Role | Lecturer at Harvard Kennedy School |
| Publications | “Click Here to Kill Everybody,” “Data and Goliath” |
| Industry Influence | Advisor to governments, corporations, and global security institutions |
| Research Focus | AI misuse, cybercrime evolution, digital trust systems |
| Official Website | https://www.schneier.com |

These aren’t isolated stunts pulled off by a few rogue coders. Cloud-hosted AI models now let threat actors operate on an industrial scale. Before lunch, a single person can fake audio and video calls, run automated vulnerability scans, and send out dozens of customized phishing messages. A lone attacker with a rented GPU cluster can now do what once required coordinated teams.

Intelligent messaging isn’t the only threat.

Some of the most dangerous threats hide behind code that AI keeps changing. Once experimental, polymorphic malware is now in active use. This dynamic code adapts in real time to the host environment’s normal operations, rewriting sections of itself as it runs. Security systems that rely on recognizing static patterns are caught off guard.

“Trying to catch a shapeshifter in a hall of mirrors” is how one cybersecurity engineer put it. As soon as one variant is reported, another appears that is slightly different but works the same way.
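To see why static pattern matching struggles here, consider a minimal Python sketch. It assumes a scanner that blocklists files by their SHA-256 digest; the byte strings and the `is_flagged` helper are hypothetical stand-ins, not any real product's logic. Changing a single byte produces a completely different digest, so the variant is no longer on the list.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Harmless placeholder bytes standing in for a sample that was reported.
original = b"harmless stand-in for a flagged sample"

# A "variant": one extra byte appended. We assume behavior is unchanged.
variant = original + b"\x00"

# Hypothetical blocklist built from previously reported samples.
blocklist = {sha256_hex(original)}


def is_flagged(data: bytes) -> bool:
    """Static check: flag data only if its exact digest is on the blocklist."""
    return sha256_hex(data) in blocklist


print(is_flagged(original))  # True: exact match with the reported sample
print(is_flagged(variant))   # False: one changed byte gives a new digest
```

Real scanners use richer signatures than a whole-file hash, but the underlying weakness is the same: a check tied to exact content can be sidestepped by content that changes.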

An even more concerning development is the rise of adversarial machine learning. This technique introduces deliberate, tiny distortions into input data, just enough to confuse detection algorithms but not enough to raise human suspicion. A file may appear clean to a scanner while concealing payloads deep inside its layers. A firearm could be mistaken for a phone in a surveillance photo. These manipulations are not accidental errors; they are precise attacks on the trust placed in automated decision-making systems.

In one well-known instance, a threat actor used similar techniques to fool biometric verification software, getting past voice recognition safeguards with an almost perfect synthetic match. The system granted the attacker access even though the attacker was never inside the building.
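The idea of a tiny, deliberate distortion can be illustrated with a toy example. The sketch below assumes a hypothetical linear detector whose weights are known to the attacker; the weights, bias, and feature values are invented for illustration and do not describe any real system. Nudging each feature by at most 0.1 against the sign of its weight is enough to flip the verdict.

```python
def score(weights, bias, features):
    """Linear detector: a positive score means the input is flagged."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def perturb(weights, features, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers the score."""
    return [x - epsilon * sign(w) for w, x in zip(weights, features)]


# Hypothetical model parameters and input features (assumptions for this sketch).
weights = [0.9, -0.4, 0.7]
bias = -0.5
features = [0.6, 0.2, 0.3]

adversarial = perturb(weights, features, epsilon=0.1)

print(score(weights, bias, features) > 0)     # True: original input is flagged
print(score(weights, bias, adversarial) > 0)  # False: perturbed input passes
```

Production detectors are far more complex than a three-weight linear model, but the same principle applies: small, targeted changes can move an input across a decision boundary without looking unusual to a person.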

The psychological effect is equally powerful. The hesitancy that once shielded us disappears when a malicious actor sounds and acts just like your CEO or, worse, your child. Instead of asking questions, workers comply, especially under pressure, and particularly when AI tunes the message to seem well timed, emotionally urgent, and perfectly clear.

By combining social engineering with AI-driven analysis, attackers tailor their campaigns to both individual targets and organizational blind spots. AI can, for instance, determine which vendor tools are missing recent patches or which departments are less likely to enforce multi-factor authentication. It is targeted manipulation that pairs human knowledge with machine speed.

During a private demonstration, I watched a model scan a company hierarchy, single out a newly hired intern, and mimic a manager’s request for file access. The tone was informal, the timing was credible, and permission was granted immediately.

These breaches have become so quiet that they have an eerie grace. No brute force. No noisy trojans. Only recognizable voices, well-timed messages, and entirely ordinary-looking network activity.

Despite the gloom, the answer is redesign, not defeat.

Cybersecurity teams are starting to build defenses that learn instead of just react. AI trained on adversarial patterns, anomaly detection, and behavioral analytics are gaining ground. Rather than simply blocking recognized threats, these systems watch for contextual anomalies, unusual timing, and even shifts in the tone of internal communication.
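As a rough illustration of the anomaly-detection idea, the sketch below scores a new event against a behavioral baseline using a z-score. The baseline hours, the threshold of 3, and the `is_anomalous` helper are assumptions made for this example; it also ignores the wraparound at midnight, which a real system would need to handle.

```python
from statistics import mean, stdev


def is_anomalous(value, baseline, threshold=3.0):
    """Flag value if it lies more than `threshold` standard deviations from the baseline mean."""
    spread = stdev(baseline)
    if spread == 0:
        # With no variation in the baseline, any different value is unusual.
        return value != baseline[0]
    z_score = (value - mean(baseline)) / spread
    return abs(z_score) > threshold


# Hypothetical hours of day (24h clock) at which a user usually requests transfers.
baseline_hours = [9, 10, 9, 11, 10, 9, 10, 11, 10, 9]

print(is_anomalous(10, baseline_hours))  # False: within the usual pattern
print(is_anomalous(3, baseline_hours))   # True: a 3 a.m. request stands out
```

A single timing feature is only a starting point. The systems described above combine many such signals, so a message that looks right in wording can still be flagged because its timing or context does not fit.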

One promising strategy is to deploy counter-AI systems that simulate common attack techniques, so businesses can test their resilience before a real threat materializes. These exercises are especially helpful in exposing human weaknesses, not just technical ones.

However, technology alone won’t save us.

Behavioral training and cultural awareness must keep pace. Workers must know how to identify a fake voice. They must be aware that an email mentioning last Friday’s offsite might have been fabricated. More crucially, they need to feel free to ask questions, particularly when familiarity and urgency are used as pressure points.