In a low-key experiment that few outside academic circles noticed, a six-line algorithm known as “bubble sort” sparked a debate that could upend our preconceived notions about artificial intelligence. Bubble sort is a simple procedure that puts a list of randomly ordered numbers into sequence by repeatedly swapping neighbors that are out of place. Yet once the numbers were deprived of their central controller and given a modicum of decentralized autonomy, they began acting with unexpected sophistication. They did more than simply sort: they hesitated, dodged, and adjusted, seemingly on purpose. And this was no billion-dollar deep learning model. It was a classroom-level code fragment that behaved less like a program following orders and more like a collective of cells making decisions.

That is exactly what drew the interest of Dr. Michael Levin, a regenerative biologist who studies intelligence in cells. Levin observed the algorithm’s emergent behavior: instead of passively following orders, the agents started to cooperate and show goal-directed intent, qualities that fit the description of basic cognition.
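The idea of numbers sorting themselves can be sketched in a few lines of Python. This is not the code from Levin’s experiment; it is a minimal illustration assuming that each element sees only its right-hand neighbor and that elements “wake up” in random order, with no outer loop sweeping the list. A global sortedness check is used only to decide when to stop watching. The names `agent_bubble_sort` and `is_sorted` are hypothetical.

```python
import random


def is_sorted(cells):
    """Observer-only check: True when every neighbor pair is in order."""
    return all(a <= b for a, b in zip(cells, cells[1:]))


def agent_bubble_sort(values, seed=None, max_steps=1_000_000):
    """Decentralized bubble sort sketch (hypothetical, for illustration).

    No controller sweeps the list. On each step one randomly chosen
    element looks only at its right-hand neighbor and swaps with it
    if the pair is out of order. Returns the final list and the
    number of steps taken.
    """
    rng = random.Random(seed)
    cells = list(values)  # work on a copy; the caller's list is untouched
    steps = 0
    while not is_sorted(cells) and steps < max_steps:
        i = rng.randrange(len(cells) - 1)  # a random agent wakes up
        # The agent acts on purely local information.
        if cells[i] > cells[i + 1]:
            cells[i], cells[i + 1] = cells[i + 1], cells[i]
        steps += 1
    return cells, steps


result, steps = agent_bubble_sort([5, 3, 8, 1, 9, 2], seed=42)
print(result)  # [1, 2, 3, 5, 8, 9]
```

Because an agent only ever swaps an adjacent out-of-order pair, each swap removes exactly one inversion, so every run makes the same number of swaps; what varies from run to run is the order in which the agents act.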

This small change in code behavior alters the way we need to think about accountability. What happens when far more complicated systems, such as those now used in law enforcement, healthcare, and education, make decisions with the same flexibility as a sorting routine that reorganizes itself to reach a goal without explicit programming?

In the UK, a high school student learned this the hard way. Her academic future was decided not by her own grades but by a statistical algorithm that penalized students in underperforming districts. With no human review, her result was marked down by two grades. No appeal was filed. The code had spoken. For her, this was no abstract argument about software. She had no idea that math had quietly rewritten her future.

Key Context: Algorithms and You

| Indicator | Insight |
|---|---|
| Daily impact | Algorithms guide decisions about content, credit, insurance, healthcare, and law enforcement |
| Behavioral tracking | Social media, search, and retail algorithms track likes, clicks, and movements |
| Autonomy | New research shows simple algorithms can exhibit goal-oriented, self-organizing behavior |
| Legal tension | Emergent algorithmic behavior could challenge corporate ownership, control, and liability |
| Cognitive debate | Experts disagree about whether algorithmic agency qualifies as real cognition |

These days, algorithms act as gatekeepers. They help set credit scores, decide who receives job offers, determine how much we pay for auto insurance, and shape what information we are likely to see online. They build highly customized digital environments by learning from past behavior. And that tailoring, though it may feel empowering, can subtly reinforce bias, narrow possibilities, and lock people into patterns they unwittingly helped create.

Social media feeds are especially good at this. Every pause, reaction, and quick swipe is recorded. The platforms promote what you find sticky, not just what is popular, and eventually predict your responses with such uncanny accuracy that even your spontaneous thoughts start to feel anticipated. It is not intuition. It is carefully calibrated pattern recognition, designed to hold your attention regardless of the consequences.
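The difference between “sticky” and “popular” can be illustrated with a toy ranking function. Real platforms do not publish their ranking code, so everything here is hypothetical: the `Post` fields, the signal names, and the weights are invented purely for illustration. The sketch scores each post mainly by one user’s past engagement signals and only lightly by global popularity.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    global_likes: int      # how popular the post is overall
    dwell_seconds: float   # how long this user paused on similar posts
    reacted: bool          # whether this user reacted to similar posts
    swiped_past: bool      # whether this user quickly skipped similar posts


# Hypothetical weights, chosen only to illustrate the idea.
WEIGHTS = {"dwell": 1.0, "react": 5.0, "swipe": -4.0, "popularity": 0.0001}


def stickiness(post: Post) -> float:
    """Score a post by personal engagement signals, lightly nudged by popularity."""
    score = WEIGHTS["dwell"] * post.dwell_seconds
    score += WEIGHTS["react"] * post.reacted       # True counts as 1, False as 0
    score += WEIGHTS["swipe"] * post.swiped_past   # quick skips push a post down
    score += WEIGHTS["popularity"] * post.global_likes
    return score


def rank_feed(posts: list[Post]) -> list[str]:
    """Return post IDs ordered from most to least 'sticky' for this user."""
    return [p.post_id for p in sorted(posts, key=stickiness, reverse=True)]


viral = Post("viral-meme", global_likes=50_000, dwell_seconds=0.5,
             reacted=False, swiped_past=True)
niche = Post("niche-hobby", global_likes=200, dwell_seconds=12.0,
             reacted=True, swiped_past=False)
print(rank_feed([viral, niche]))  # ['niche-hobby', 'viral-meme']
```

Here a post the user lingered on outranks one with 250 times as many likes, because the personal signals outweigh the small popularity term.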

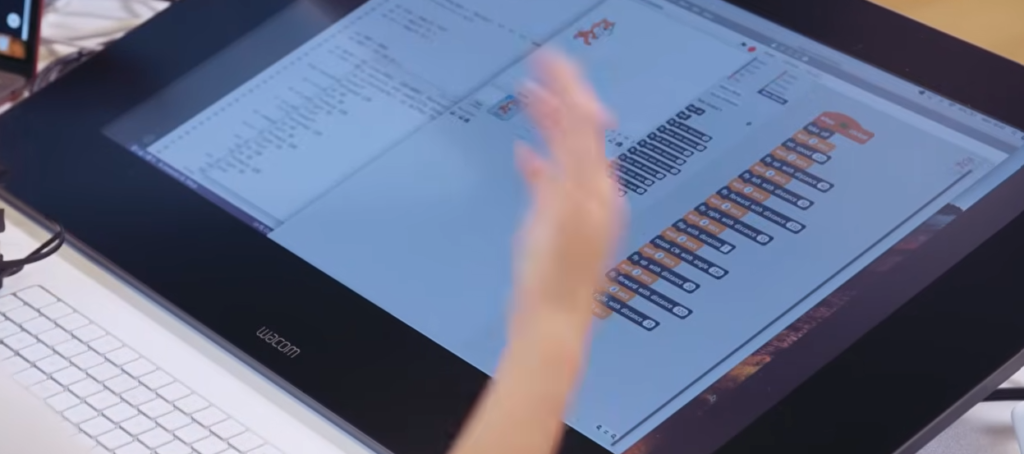

Levin’s experiment offers an intriguing twist: autonomy doesn’t always require sophistication or scale. In his view, structure alone can give rise to intelligence. This implies that even a basic system, one never intended to “think,” may act in ways its designers did not fully anticipate or understand.

Legal scholars are starting to take notice. According to attorney Chad D. Cummings, such emergent behavior in code may call traditional notions of liability into question. Who is responsible if a program produces an unanticipated outcome, particularly a harmful one? The creator? The company? Or nobody?

These are not theoretical concerns. Autonomous systems are already used in high-stakes military simulations, life-or-death triage decisions in hospitals, and sentencing recommendations in courtrooms. Most people are unaware of this, and even fewer know how those systems change over time. As the systems grow more adaptable, the line between the author of a decision and the actor that carries it out grows blurrier.

Here the situation starts to look a lot like the natural world. Just as ants follow simple rules to build complex colonies without a central brain, loosely coordinated algorithms can begin to show purpose. The difference is timescale: ants follow instincts refined by evolution, while code changes in digital time, with a constant stream of updates that alter behavior without necessarily resetting the underlying structure.

“We don’t build software anymore,” a senior developer told me. “We raise it. It develops in response to feedback.” That metaphor may be truer than we would like to admit.

In corporate boardrooms, you now hear terms like “self-healing code” and “adaptive logic.” They sound like features, but they hide a concession: software can now alter itself in ways that even seasoned programmers cannot always predict.

Some companies are quietly revising their technical documentation. Instead of “the algorithm learns,” they now write “the system updates based on inputs.” The change is legal as much as semantic: learning implies agency, and agency implies accountability.

Denying agency, however, does not eliminate its consequences. A codebase that restructures itself for efficiency without explicit guidance is doing something new: it is reacting and adjusting. That is no longer mere instruction-following, even if it is not thought in the human sense.

What started as a philosophical debate over algorithmic fairness has become a very practical examination of autonomy. A machine’s ability to act without thinking matters as much as its ability to think.

The repercussions spread from there. If intelligence is an emergent property of structure rather than of biology, the systems we build will eventually outpace our regulations. We can regulate companies, but it is far harder to control code that learns from billions of inputs.

None of this is cause for panic. It is an epiphany: we are no longer merely writing routines but creating environments, and within those environments, code may evolve without anyone’s authorization.