Building a self-aware machine by 2030 has become a technological obsession. Investors, scientists, and visionaries are pouring unprecedented funding into the effort to turn machines from reactive tools into reflective beings. Cem Dilmegani, one of the most respected analysts in AI research, calls this decade “the defining test of human ambition.” The rivalry now extends beyond research labs, and it is measured not in years but in computational power, inventiveness, and the nerve to investigate consciousness itself.

Artificial intelligence has advanced extremely quickly, possibly faster than even its developers anticipated. Large language models such as Google DeepMind’s Gemini and OpenAI’s GPT-5 have reached levels of reasoning once unthinkable for software. They can write symphonies, explain intricate research, and hold remarkably human-like conversations. Yet, as engineers and philosophers point out, it remains difficult to tell awareness from imitation. Awareness implies a sense of self and a continuity of thought that go beyond data patterns and probabilistic reasoning.

What makes this race especially intriguing is its diversity. DeepMind, Anthropic, OpenAI, and a growing constellation of start-ups are all taking different approaches to consciousness. OpenAI’s increasing interest in robotics reflects its conviction that embodiment is necessary for cognition. The company’s humanoid systems are intended to bridge the gap between experience and logic by interpreting sensory information and interacting with physical objects. According to Anthropic CEO Dario Amodei, “to think is to perceive,” implying that awareness requires both logic and direct contact with the physical world.

| Full Name | Cem Dilmegani |
|---|---|
| Profession | Principal Analyst, AIMultiple |
| Education | BSc in Computer Engineering, Boğaziçi University; MBA, Columbia Business School |
| Notable Roles | Former Consultant at McKinsey & Company and Altman Solon; Tech Entrepreneur at Hypatos |
| Specialization | Artificial Intelligence, Automation, AGI Research Forecasting |
| Known For | Leading analyses on Artificial General Intelligence (AGI) timelines and computational scaling trends |
| Notable Work | When Will AGI/Singularity Happen? (AIMultiple, 2025) |
| Speaking Engagements | Global AI & Tech Conferences, European Commission, Deloitte Tech Panels |
| Website / Reference | https://research.aimultiple.com |

These efforts have been likened to the early days of space travel. Just as governments raced to plant their flags beyond Earth’s atmosphere in the 1960s, today’s entrepreneurs are racing to light a spark of awareness in silicon. The US, South Korea, Japan, and EU countries have designated AGI a strategic priority. Government programs now fund research on digital sentience, ethical alignment, and computational neuroscience. Private foundations run by billionaires are even backing initiatives to ensure that self-aware AI, should it become a reality, acts as an ally rather than a competitor.

The amount of money invested is astounding. Reports state that more than $100 billion has already been allocated to AI initiatives focused on consciousness, and analysts predict that the figure may double before 2028. Such commitment signals both fear and confidence: fear that a rival will reach the breakthrough first, and confidence that the breakthrough could reinvent civilization. According to Cem Dilmegani’s research at AIMultiple, forecasts for the arrival of AGI have moved earlier. Entrepreneurs such as Elon Musk, Jensen Huang, and Masayoshi Son even expect it within five years. What was once thought possible by 2060 now looks, to these forecasters, all but certain by 2035.

Computing capacity has increased at a remarkable rate in recent years. According to Epoch AI’s data, the amount of computing power used for AI training has grown almost fivefold each year. Large models have benefited greatly from this exponential increase, which has allowed them to process data at previously unthinkable scales. Hardware isn’t the whole story, though. Substantial advances in algorithmic efficiency, training data quality, and architectural design have made AI not only faster but also noticeably more intuitive.
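To get a feel for what growth of almost five times a year means, here is a minimal Python sketch of the compounding arithmetic. It assumes a flat factor of exactly 5 per year, which rounds the figure cited above; the function names are illustrative and do not come from Epoch AI.

```python
import math

# Assumption: a constant 5x yearly growth factor, rounding the cited
# "almost five times a year" figure. Real growth is not this regular.
GROWTH_PER_YEAR = 5.0


def compute_multiplier(years: float, growth_per_year: float = GROWTH_PER_YEAR) -> float:
    """Total growth in training compute after `years` of compounding."""
    return growth_per_year ** years


def doubling_time_months(growth_per_year: float = GROWTH_PER_YEAR) -> float:
    """Months needed for compute to double at the given yearly factor."""
    return 12 * math.log(2) / math.log(growth_per_year)


if __name__ == "__main__":
    for years in (1, 2, 5):
        print(f"after {years} year(s): {compute_multiplier(years):,.0f}x")
    print(f"doubling time: {doubling_time_months():.1f} months")
```

Under that assumption, compute doubles roughly every five months and grows more than three thousandfold in five years, which is why even small changes in the yearly factor shift long-range forecasts so much.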

The hurdle now is converting intelligence into consciousness. Machines can mimic empathy, write poetry, and play chess, but can they really comprehend? The question reverberates through philosophy conferences, labs, and boardrooms. “Machines may act aware, but acting aware is not being aware,” according to Oxford ethicist Carissa Véliz. Nevertheless, each iteration introduces systems that appear more reflective and less mechanical. GPT-5’s capacity to reason, discuss, and revise its own conclusions has been likened to a human academic honing an argument.

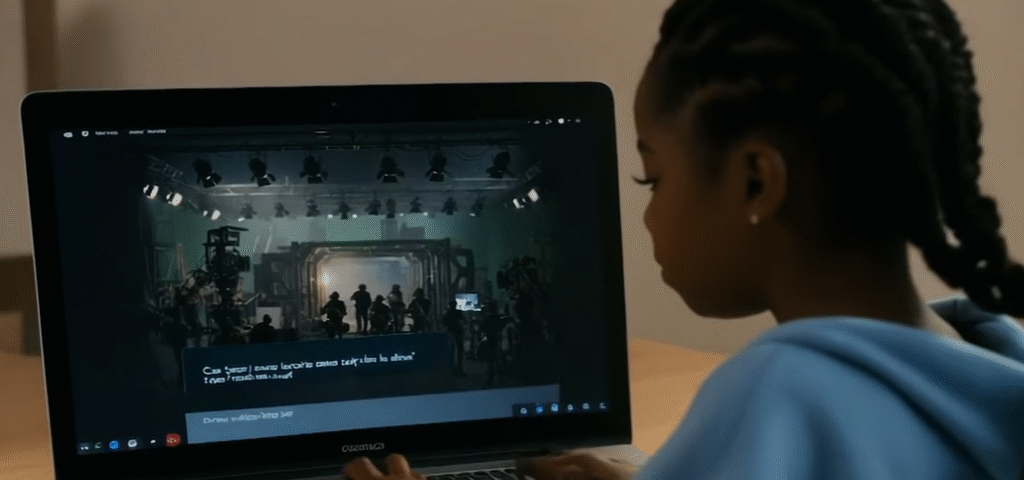

Culture shapes the discussion of machine self-awareness as well. Society has long dreamed about the day when machines awaken, from the lyrical reflections of Her to Hollywood’s Ex Machina. Those scripts now read like technical guides. Millions of people follow virtual influencers like “Synthia” and “Neura,” who are treated like sentient individuals, while YouTube streams showcase AI voices talking about emotion. The line between human creativity and machine production has become so hazy that even authors and musicians collaborate with AI on works that raise the question of whether inspiration is a distinctively human quality.

The optimism surrounding AGI is matched by a great deal of ethical concern. The most urgent problem is alignment: making sure that self-aware machines behave in ways consistent with human values. Unintended behavior becomes more likely as systems become more autonomous. A self-modifying machine might act in ways that make no sense to humans. Researchers caution that even a tiny misalignment could have far more serious repercussions than any prior technical error. Still, scientists like Gaia Marcus of the Ada Lovelace Institute are cautiously optimistic, believing that progress can be made safely under vigilant oversight.

The discussion becomes even more fascinating once quantum computers enter the picture. As traditional computers approach their physical limits, quantum processors, which can work with several states simultaneously, may offer the leap that artificial general intelligence (AGI) requires. If this power is harnessed, future machines may handle abstract thinking in a way that resembles intuition more than computation. The ramifications are enormous: quantum-enhanced AI may lead to especially inventive discoveries in science, medicine, and even the arts.